Transformers for 3D Object Detection in LiDAR Point Clouds

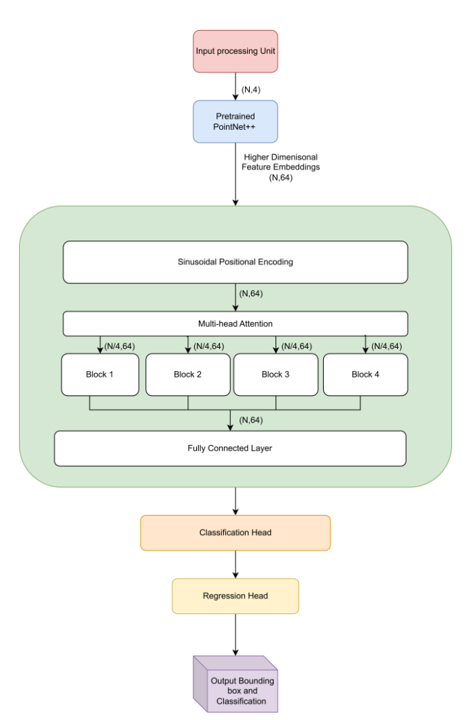

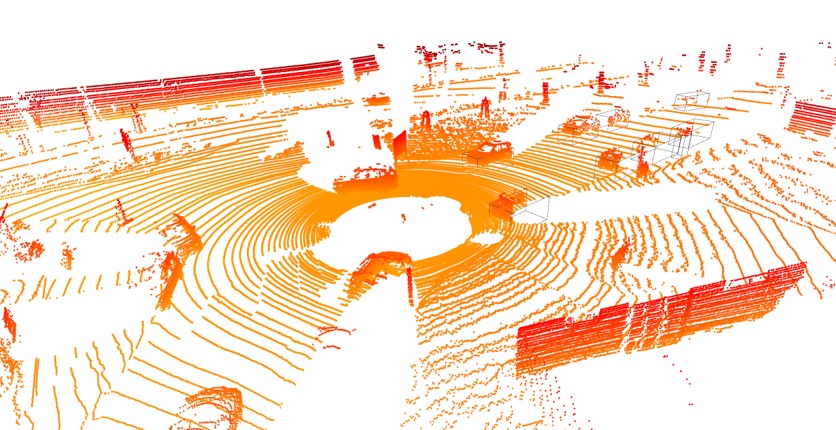

Overview: This project introduces a transformer-based 3D object detection framework for LiDAR point clouds, aimed at improving perception and navigation in autonomous vehicles. Unlike detectors that rely solely on CNN or PointNet-style backbones, our approach adds self-attention to capture long-range dependencies and spatial correlations within sparse, irregular LiDAR data. A pretrained PointNet++ model handles feature extraction, and the resulting embeddings are passed to transformer blocks for object detection.
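To make the role of self-attention concrete, here is a minimal, framework-free sketch of single-head scaled dot-product attention over a handful of point embeddings. It is an illustration only, not the project's implementation: it assumes identity query/key/value projections, and the function names and toy feature vectors are hypothetical. In practice the embeddings would come from PointNet++ and the attention layers from PyTorch.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features):
    """Single-head scaled dot-product self-attention.

    Assumption: queries, keys, and values are the input features themselves
    (identity projections); a trained model would use learned projections.

    features: list of N embeddings, each a list of D floats.
    Returns (outputs, weights): N vectors of length D and the N x N weights.
    """
    dim = len(features[0])
    scale = 1.0 / math.sqrt(dim)
    outputs, weights = [], []
    for query in features:
        # Similarity of this point to every point, including distant ones
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in features]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of all value vectors
        outputs.append(
            [sum(w * value[d] for w, value in zip(row, features)) for d in range(dim)]
        )
    return outputs, weights


# Hypothetical 4-dimensional embeddings for three points
point_features = [
    [1.0, 0.0, 0.0, 0.0],
    [0.9, 0.1, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
attended, attn = self_attention(point_features)
```

Each row of `attn` sums to 1, and points with similar embeddings receive larger weights regardless of where they sit in the input order, which is the property that lets attention relate distant parts of a scan.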

GitHub Repository: View Source Code on GitHub

Key Features:

- Transformer-Based Detection: Self-attention captures global context and long-range dependencies across the LiDAR point cloud.

- Pretrained PointNet++ for Feature Extraction: Eliminates the need for separate embedding network training.

- Efficient Data Processing: LiDAR point cloud data is processed in chunks to handle large-scale inputs.

- Custom Loss Function: Combines Smooth L1 Loss, IoU Loss, and Cross-Entropy Loss for precise object localization and classification.

- Modular Network Design: Multiple architectures were implemented, ranging from basic embedding networks to full transformer-based models.

- Training on KITTI Dataset: Evaluated using Intersection over Union (IoU) and classification accuracy, achieving competitive results.
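The combined loss listed above can be sketched as a weighted sum of its three terms. This is a hedged illustration rather than the project's actual loss: the box layout `(cx, cy, cz, dx, dy, dz)`, the axis-aligned IoU (yaw is ignored), the `1 - IoU` form of the IoU term, and the unit weights are all assumptions, and every function name is hypothetical.

```python
import math


def smooth_l1(pred, target, beta=1.0):
    """Mean Smooth L1 loss: quadratic below beta, linear above it."""
    total = 0.0
    for p, t in zip(pred, target):
        diff = abs(p - t)
        total += 0.5 * diff * diff / beta if diff < beta else diff - 0.5 * beta
    return total / len(pred)


def axis_aligned_iou_3d(box_a, box_b):
    """IoU of two boxes given as (cx, cy, cz, dx, dy, dz).

    Assumption: boxes are axis-aligned; heading angle is not modelled here.
    """
    inter = 1.0
    for i in range(3):
        lo = max(box_a[i] - box_a[i + 3] / 2, box_b[i] - box_b[i + 3] / 2)
        hi = min(box_a[i] + box_a[i + 3] / 2, box_b[i] + box_b[i + 3] / 2)
        inter *= max(0.0, hi - lo)  # zero overlap on any axis gives zero volume
    vol_a = box_a[3] * box_a[4] * box_a[5]
    vol_b = box_b[3] * box_b[4] * box_b[5]
    union = vol_a + vol_b - inter
    return inter / union if union > 0 else 0.0


def cross_entropy(logits, label):
    """Cross-entropy for one sample from raw class logits."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def combined_loss(pred_box, gt_box, logits, label, w_reg=1.0, w_iou=1.0, w_cls=1.0):
    """Weighted sum of the three terms; the weights are hypothetical."""
    reg = smooth_l1(pred_box, gt_box)
    iou_term = 1.0 - axis_aligned_iou_3d(pred_box, gt_box)
    cls = cross_entropy(logits, label)
    return w_reg * reg + w_iou * iou_term + w_cls * cls


# Toy example: a prediction shifted 1 m along x from the ground-truth box
gt_box = (0.0, 0.0, 0.0, 2.0, 4.0, 2.0)
pred_box = (1.0, 0.0, 0.0, 2.0, 4.0, 2.0)
loss = combined_loss(pred_box, gt_box, logits=[2.0, 0.5, 0.1], label=0)
```

The regression term penalizes each box parameter independently, while the IoU term scores the box as a whole, so the two complement each other; the cross-entropy term handles the class label.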

Results & Impact:

Experiments demonstrated the effectiveness of transformers in capturing long-range dependencies for object detection in urban driving scenarios. The custom loss function improved object localization, and chunk-wise processing enhanced computational efficiency. Despite GPU constraints, the model showed promising results and outperformed traditional CNN-based approaches in feature alignment.
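As a rough illustration of chunk-wise processing, the sketch below splits a scan into fixed-size chunks so that only one chunk needs to be handled at a time. The chunking strategy, the `iter_chunks` name, and the chunk size are assumptions made for this example; the point layout follows the KITTI Velodyne convention of x, y, z, and reflectance.

```python
from typing import Iterator, List, Sequence, Tuple

# One LiDAR return: x, y, z, reflectance (KITTI Velodyne layout)
Point = Tuple[float, float, float, float]


def iter_chunks(points: Sequence[Point], chunk_size: int) -> Iterator[Sequence[Point]]:
    """Yield consecutive slices of at most chunk_size points.

    Assumption: simple sequential chunking; a real pipeline might instead
    chunk spatially (for example by voxel or range bin).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, len(points), chunk_size):
        yield points[start:start + chunk_size]


# Hypothetical scan of 10 points, processed 4 points at a time
scan: List[Point] = [(float(i), 0.0, 0.0, 0.5) for i in range(10)]
chunk_sizes = [len(chunk) for chunk in iter_chunks(scan, chunk_size=4)]
```

Because the generator yields one slice at a time, peak memory for downstream steps scales with the chunk size rather than with the full scan.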

Technologies Used:

- Deep Learning: PyTorch, Transformers, PointNet++

- Optimization: AdamW, Custom Combined Loss (Smooth L1, IoU, Cross-Entropy)

- 3D Data Processing: Open3D

- Dataset: KITTI

- Hardware: Lambda Labs cloud instances (GPU-based training)

This project contributes to advancing 3D object detection models for autonomous driving by leveraging transformers to enhance spatial reasoning in LiDAR data.