3D Reconstruction of an Autonomous Vehicle’s Environment using VecKM

Overview: This project focuses on 3D scene reconstruction for autonomous vehicle perception, using the Vectorized Kernel Mixture (VecKM) method. VecKM, developed by Dehao Yuan, is computationally efficient, robust to noise, and encodes local geometry well, which makes it well suited to processing LiDAR point cloud data. The project reconstructs autonomous vehicle environments from raw point clouds by combining VecKM's kernel mixture encodings with deep-learning-based feature extraction.

The model was evaluated on the PCPNet dataset and on CARLA-simulated LiDAR data. The evaluation showed accurate normal estimation, reduced computational cost, and improved perception for navigation in autonomous driving.

GitHub Repository: View Source Code on GitHub

Key Features:

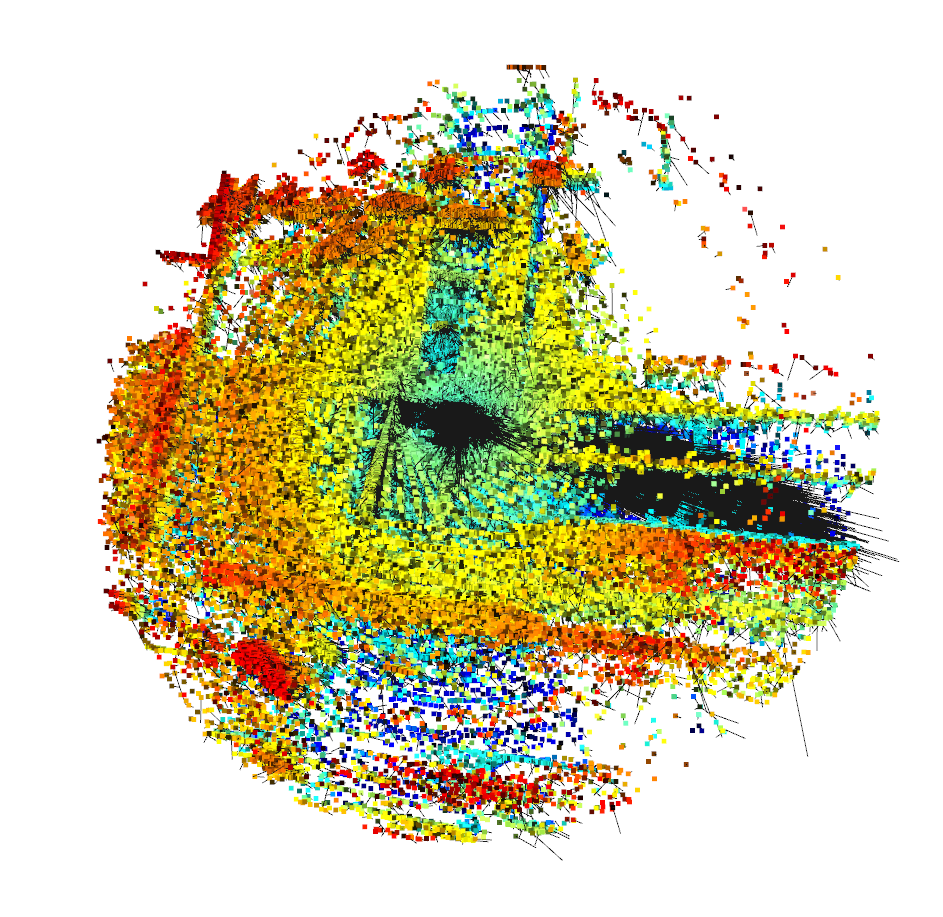

- VecKM-Based Feature Encoding: Efficiently extracts local geometric features from 3D point clouds, ensuring memory efficiency and fast processing.

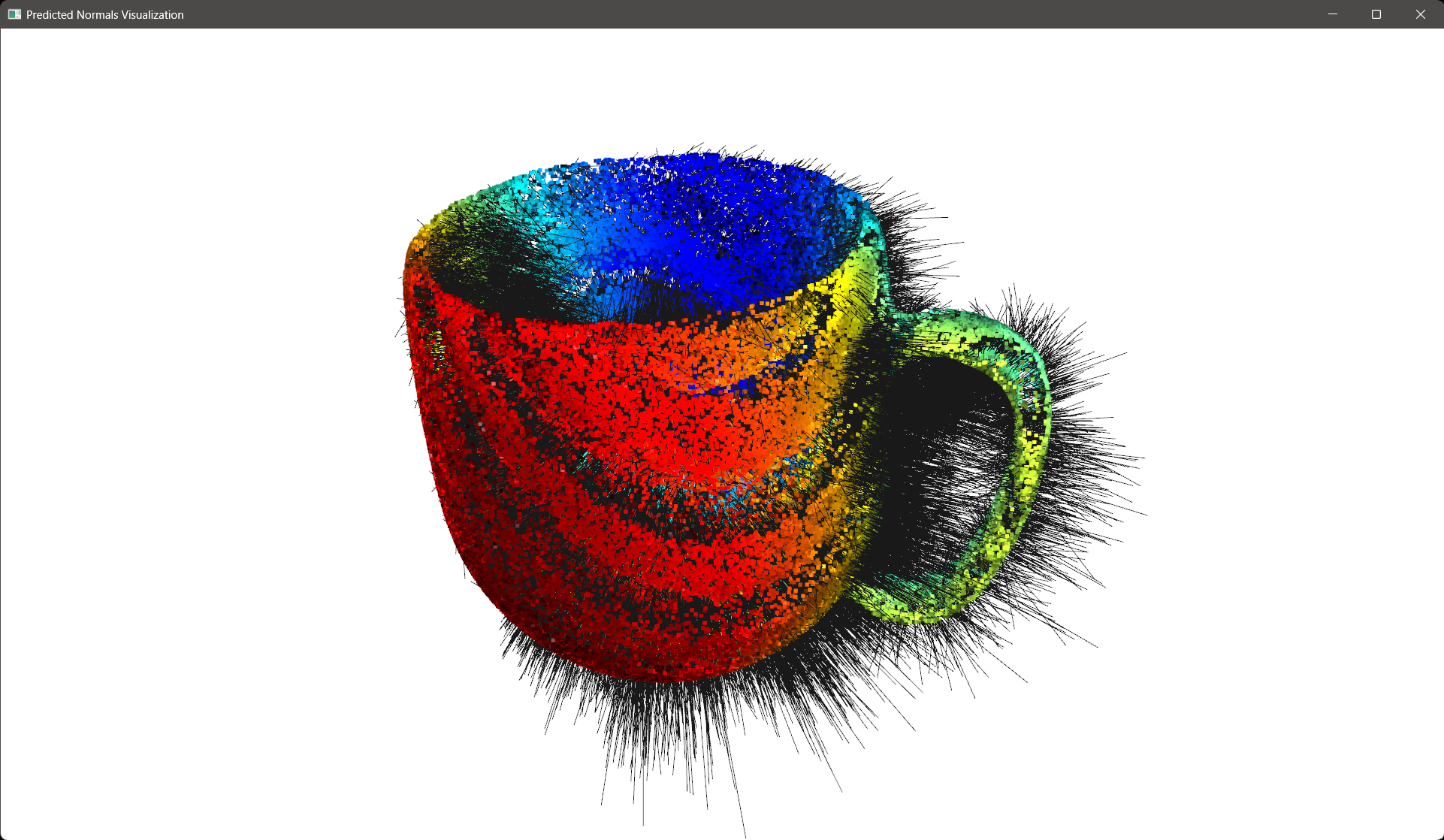

- Normal Estimation for Reconstruction: Predicts point cloud normals with high accuracy, enhancing surface reconstruction quality.

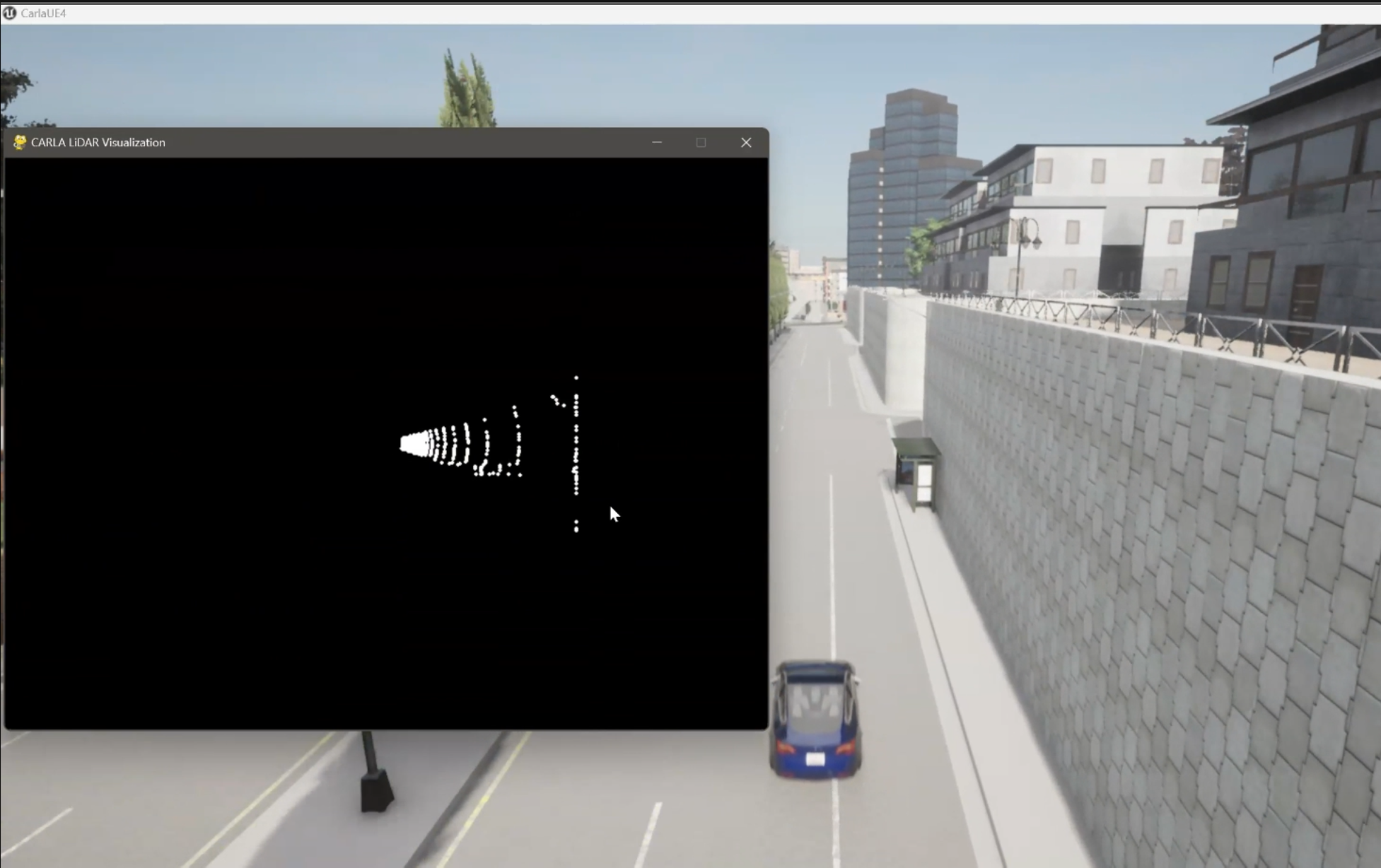

- CARLA Simulation Integration: Captures real-time point cloud data from a simulated autonomous vehicle environment, enabling practical evaluation.

- Poisson Surface Reconstruction: Converts predicted normals into a dense 3D surface representation, refining object shapes and improving perception.

- Robust to Noise and Density Variations: Demonstrates superior accuracy under challenging conditions, such as sparse or corrupted point clouds.
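To make the feature-encoding idea concrete, here is a minimal NumPy sketch of a VecKM-style local encoding. It is a simplified illustration, not the project's or the author's implementation: each point is encoded as the sum of complex exponentials of its neighbors' relative positions projected onto random frequencies. The function name `veckm_like_encoding` and the parameters `dim`, `alpha`, and `radius` are hypothetical, and the dense pairwise neighborhood is used only for readability, so this version does not have the efficiency of the real method.

```python
import numpy as np

def veckm_like_encoding(points, dim=64, alpha=6.0, radius=0.3, seed=0):
    """Simplified VecKM-style encoding (illustrative assumption, not the reference code).

    points: (n, 3) float array. Returns an (n, dim) complex array.
    """
    rng = np.random.default_rng(seed)
    # Random frequency matrix; alpha controls how fine the encoded detail is.
    A = rng.normal(0.0, alpha, size=(3, dim))
    # Per-point complex phase exp(i * x A), shape (n, dim).
    phase = np.exp(1j * (points @ A))
    # Dense radius neighborhood (includes the point itself). O(n^2), for clarity only.
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    adj = (dist < radius).astype(phase.dtype)
    # sum_j exp(i (x_j - x_k) A) == (adj @ phase)[k] / phase[k]
    enc = (adj @ phase) / phase
    # Scale every encoding to norm sqrt(dim) so that point density does not dominate.
    enc = enc / np.linalg.norm(enc, axis=1, keepdims=True) * np.sqrt(dim)
    return enc
```

Because only relative positions `x_j - x_k` enter the sum, the encoding is unchanged when the whole cloud is translated, which is a useful property for a vehicle-mounted sensor. In a pipeline like the one described here, such encodings would be passed to a small network that predicts a normal for each point.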

Results & Impact:

The model achieved high normal estimation accuracy on the PCPNet dataset, which validates VecKM's feature extraction. 3D surfaces reconstructed from LiDAR point clouds with Poisson Surface Reconstruction aligned closely with the ground truth. Integrating VecKM with CARLA-simulated LiDAR data demonstrated real-time feasibility, a step toward real-world use in self-driving vehicles.

Future Work:

- Extend VecKM integration to multi-sensor fusion, incorporating camera and LiDAR data for enhanced 3D perception.

- Optimize real-time processing speed for on-the-fly point cloud analysis in autonomous navigation.

- Scale the model to larger datasets, such as the Waymo Open Dataset, for real-world deployment in self-driving technologies.

Technologies Used:

- Machine Learning & Deep Learning: VecKM, PointNet++

- 3D Reconstruction: Poisson Surface Reconstruction, Normal Estimation

- Simulation & Data Collection: CARLA Autonomous Driving Simulator

- Optimization & Evaluation: Adam optimizer for training, Root Mean Squared Error (RMSE) as the evaluation metric
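For reference, the RMSE metric for normal estimation can be sketched as below. This assumes the common convention of measuring the angle (in degrees) between predicted and ground-truth normals and treating a flipped normal as correct (the unoriented case). The function name `normal_angle_rmse` and the `unoriented` flag are illustrative and not taken from the project code.

```python
import numpy as np

def normal_angle_rmse(pred, gt, unoriented=True):
    """RMSE of the angle (degrees) between predicted and ground-truth normals.

    pred, gt: (n, 3) arrays of normals; they need not be unit length.
    """
    pred = pred / np.linalg.norm(pred, axis=1, keepdims=True)
    gt = gt / np.linalg.norm(gt, axis=1, keepdims=True)
    cos = np.sum(pred * gt, axis=1)
    if unoriented:
        # A normal pointing the opposite way counts as a perfect match.
        cos = np.abs(cos)
    # Guard against values slightly outside [-1, 1] caused by rounding.
    cos = np.clip(cos, -1.0, 1.0)
    angles = np.degrees(np.arccos(cos))
    return float(np.sqrt(np.mean(angles ** 2)))
```

Squaring the angles before averaging means that a few badly wrong normals raise the score more than many slightly wrong ones, so a low RMSE indicates few large outliers.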

This project enhances 3D environment perception for autonomous vehicles and demonstrates efficient, noise-robust LiDAR-based scene reconstruction.

Video Presentation:

Data collection in the CARLA simulation environment using an autonomous vehicle agent.
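As a rough illustration of the data collection step, the sketch below converts the raw buffer of one CARLA LiDAR measurement into NumPy arrays. It assumes the layout used by recent CARLA releases for `sensor.lidar.ray_cast`, in which each point is stored as four 32-bit floats (x, y, z, intensity). The helper name `lidar_buffer_to_xyz` is hypothetical and is not taken from the project code.

```python
import numpy as np

def lidar_buffer_to_xyz(raw_data):
    """Split a CARLA-style LiDAR byte buffer into coordinates and intensities.

    raw_data: bytes-like object holding float32 values, 4 per point
              (x, y, z, intensity); this layout is an assumption.
    Returns (xyz, intensity) with shapes (n, 3) and (n,).
    """
    pts = np.frombuffer(raw_data, dtype=np.float32).reshape(-1, 4)
    # Copy so the results are writable and independent of the sensor buffer.
    return pts[:, :3].copy(), pts[:, 3].copy()

# Possible use inside a sensor callback (requires a running CARLA server):
#   lidar.listen(lambda m: frames.append(lidar_buffer_to_xyz(m.raw_data)[0]))
```

The resulting `(n, 3)` arrays can be saved frame by frame and later passed to the normal estimation and surface reconstruction stages.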